I am a fourth-year undergraduate at UNC Chapel Hill, majoring in Computer Science and Economics with a minor in Statistics. I work in the MURGe Lab, where I am mentored by Prof. Mohit Bansal and Prof. Elias Stengel-Eskin. I am currently applying to PhD programs for Fall 2026.

My research focuses on building collaborative multimodal AI systems for monitorable LLM reasoning. I am also interested in using LLM post-training methods to improve interpretability.

🔥 News

- 2025.08: 🎉🎉 Our paper “A Multimodal Classroom Video Question-Answering Framework for Automated Understanding of Collaborative Learning” was accepted to ICMI 2025!

- 2025.06: 🎉🎉 New preprint “Movie Facts and Fibs (MF^2): A Benchmark for Long Movie Understanding” introducing a benchmark for narrative understanding of long open-domain movies.

📝 Publications

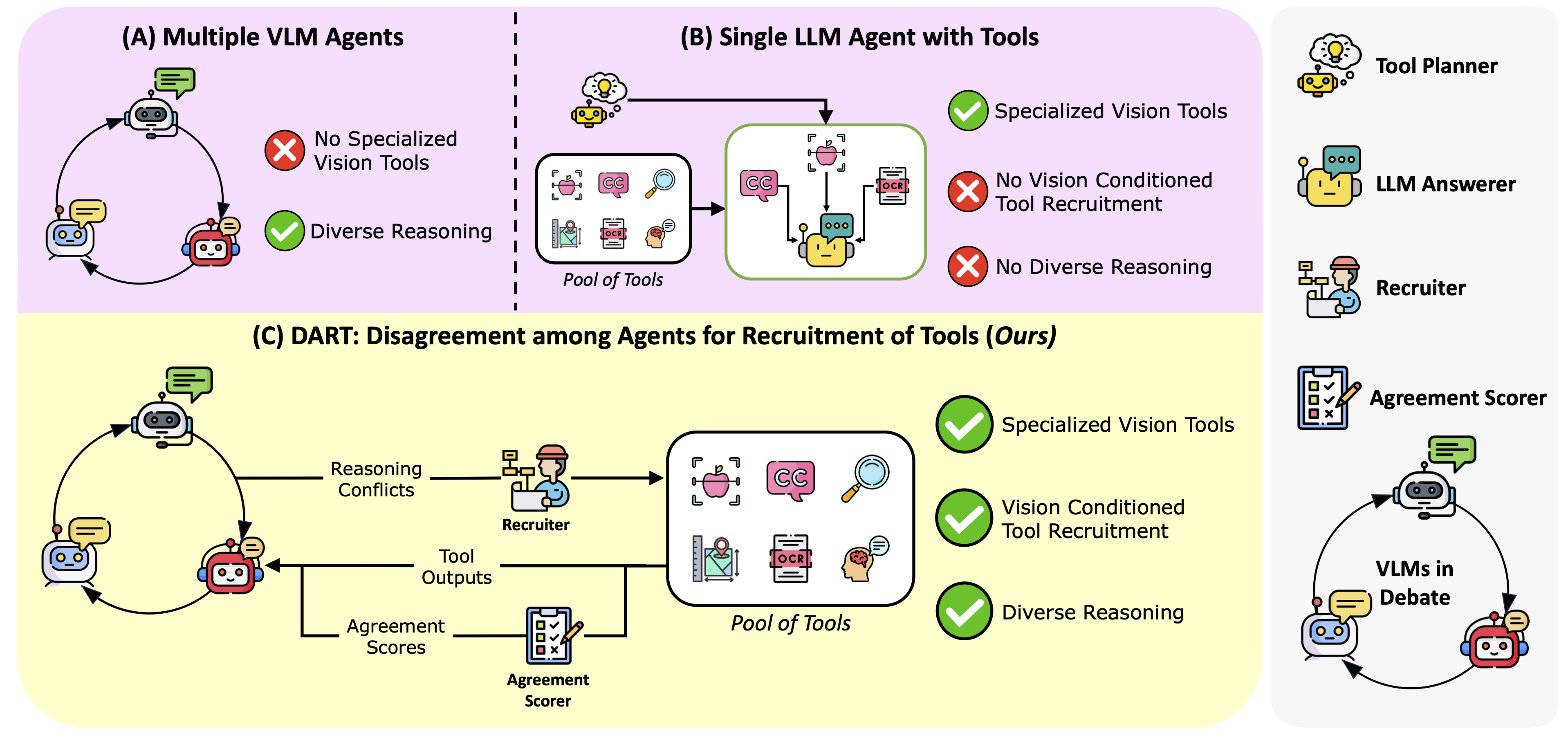

DART: Leveraging Multi-Agent Disagreement for Tool Recruitment in Multimodal Reasoning

Nithin Sivakumaran, Justin Chih-Yao Chen, David Wan, Yue Zhang, Jaehong Yoon, Elias Stengel-Eskin, Mohit Bansal.

- We propose DART, a multi-agent multimodal debate framework that uses disagreement between VLM agents to recruit tools and resolve visual uncertainty

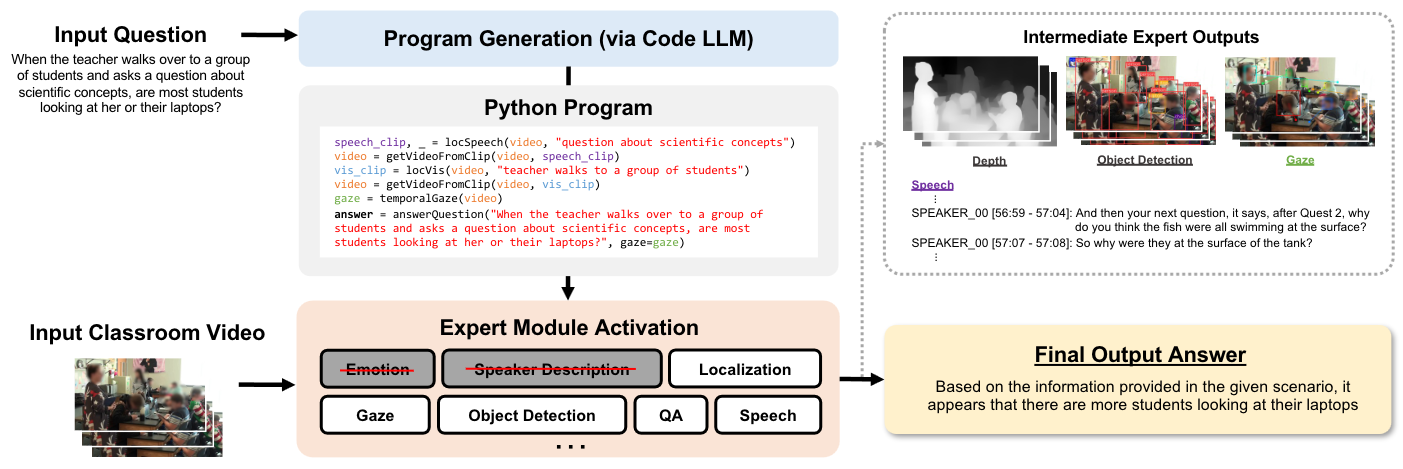

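A Multimodal Classroom Video Question-Answering Framework for Automated Understanding of Collaborative Learning

Nithin Sivakumaran, Chia-Yu Yang, Abhay Zala*, Shoubin Yu, Daeun Hong, Xiaotian Zou, Elias Stengel-Eskin, Dan Carpenter, Wookhee Min, Cindy Hmelo-Silver, Jonathan Rowe, James Lester, Mohit Bansal.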

- We propose EngageVP, a new multimodal video QA framework for stronger understanding of student engagement and behavior in classroom videos

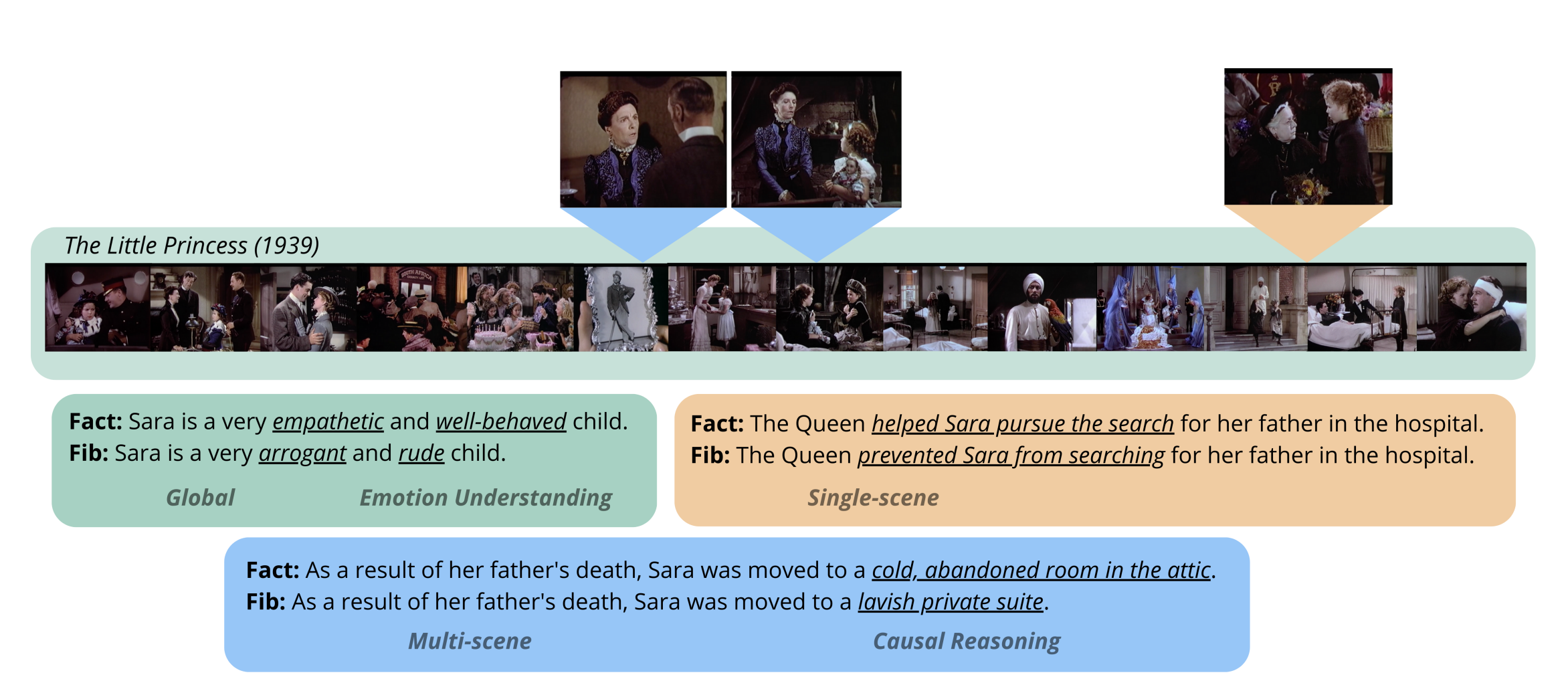

Movie Facts and Fibs (MF^2): A Benchmark for Long Movie Understanding

Emmanouil Zaranis, António Farinhas, Saul Santos, Beatriz Canaverde, … Nithin Sivakumaran, et al.

- We propose MF^2, a new benchmark for evaluating whether models can comprehend, consolidate, and recall key narrative information from full-length movies

💻 Experience

- 2024.05 - 2024.08, NSF EngageAI Institute, Research Intern.

- 2023.05 - 2023.08, Principal Financial Group, Software Engineering Intern.